Score Jacobian Chaining: Lifting Pretrained 2D Diffusion Models for 3D Generation

CVPR 2023

- TTI-Chicago 1

- Purdue University 2

- colab

A diffusion model learns to predict a vector field of gradients. We propose to apply chain rule on the learned gradients, and back-propagate the score of a diffusion model through the Jacobian of a differentiable renderer, which we instantiate to be a voxel radiance field. This setup aggregates 2D scores at multiple camera viewpoints into a 3D score, and repurposes a pretrained 2D model for 3D data generation. We identify a technical challenge of distribution mismatch that arises in this application, and propose a novel estimation mechanism to resolve it. We run our algorithm on several off-the-shelf diffusion image generative models, including the recently released Stable Diffusion trained on the large-scale LAION dataset.

Chain Rule on Score Function

Starting from the assumption that the 3D asset parameter is as likely as its 2D renderings , i.e. , we show the following relationship between 2D scores and the 3D score. Namely, a 3D score should be computed by the Vector-Jaocobian product of 2D score and renderer Jacobian over different camera viewpoints.

The arxiv manuscript uses followed by Jensen's. The detour is not correct and not needed. We will update it in the next release. The form of the Vector-Jacobian product remains the same.

Denoiser's Out of Distribution Problem

To compute the 2D score , a first attempt is to invoke the formula

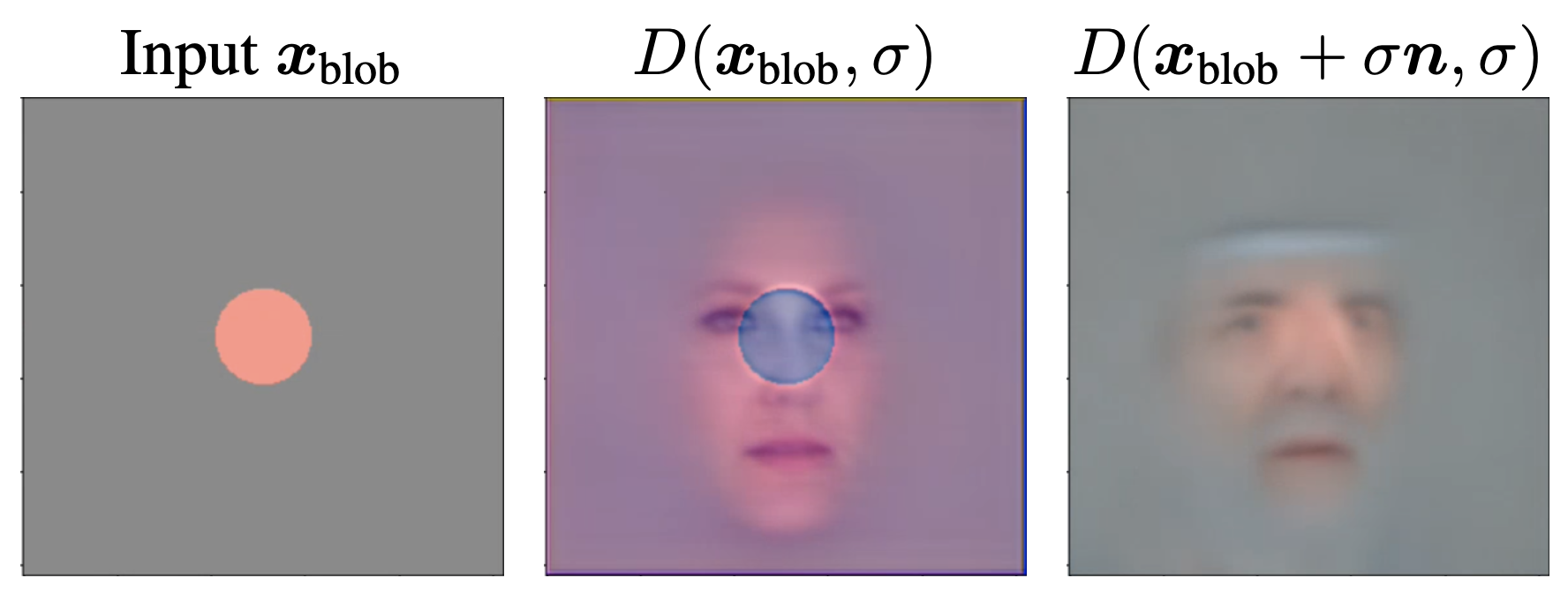

However this leads to an out-of-distribution problem. During training, when conditioned at , the denoiser has only seen noisy input of the form , where . Note that is not just noisy, it is also numerically large. Whereas a rendered RGB image from a 3D scene is capped within , has variance with large numerical range. We illustrate the OOD problem in the figure below.

Fig 2: Illustration of denoiser's OOD issue using a denoiser pretrained on FFHQ. When directly evaluating the model did not correct for the orange blob into a face image. Contrarily, evaluating the denoiser on noised input produces an image that successfully merges the blob with the face manifold. Note that this figure looks very much like SDEdit.

Perturb and Average Scoring (PAAS)

To resolve the OOD issue, we propose Perturb and Average Scoring (PAAS). It computes score on a non-noisy image by perturbing it with noise, and averaging the score computed on each of perturbations.

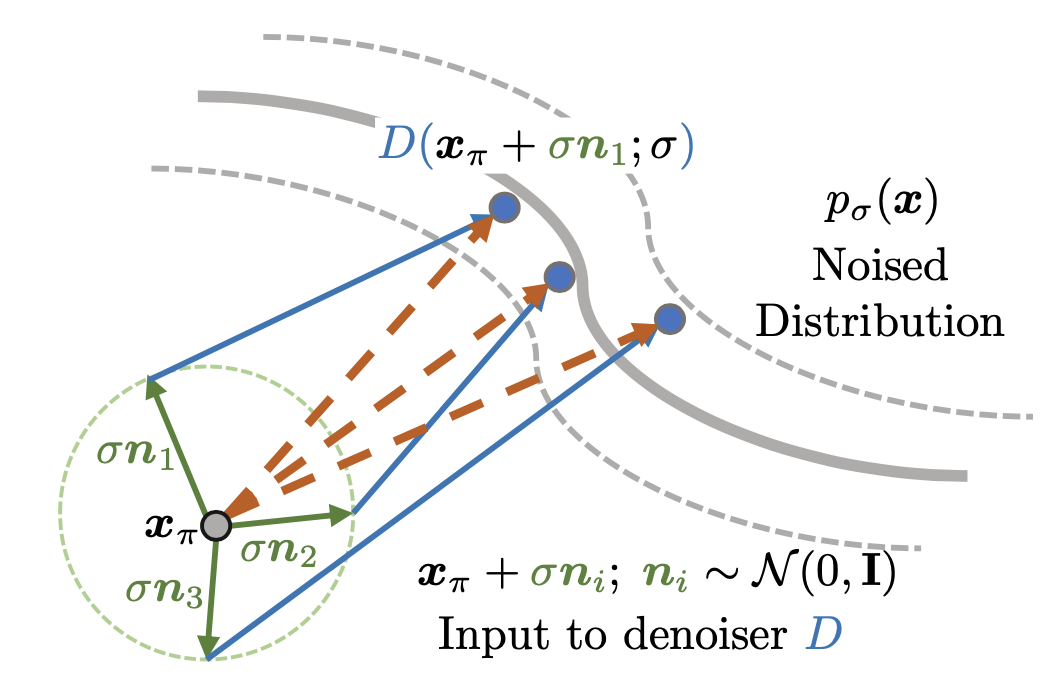

We show that mathematically, PAAS approximates the score on non-noisy input at an inflated noise level of , and we illustrate its intuition in the figure below.

Fig 3: Computing PAAS on 2D renderings . Directly evaluating leads to an OOD problem. Instead, we add noise to , and evaluate dots. PAAS is then computed by averaging over the arrows, corresponding to multiple samples of .

Generalizing to SDEdit Guidance

A nice benefit of writing the PAAS gradient as is that it shows this gradient is merely the loss gradient of current iterate and 1-step inference . If we use a full inference pipeline, then it becomes multiview SDEdit guidance i.e. . It was proposed by SparseFusion, and they call it multi-step denoising.

Relation to DreamFusion

Our formulation including Lemma 1 and Claim 1 in the paper were done before DreamFusion was released on Sept. 29th, 2022. At the time, our team was working on the LSUN Bedroom model, an unconditioned diffusion model by Dhariwal and Nicol; we thought bedrooms contain the most interesting 3D structure and were beyond the capability of 3D GANs. It turns out that making PAAS work on an unconditioned diffusion model is very challenging, even on 2D, especially with the Bedroom model (see Fig 4. of the paper). DreamFusion shows that a language conditioned diffusion model could make use of language forcing (unusually high guidance scale) to make optimization easier by making the image distribution narrower. We are influenced by this insight. That being said, this approach has its drawbacks such as over-saturated colors and limited content diversity per language prompt, and at the moment it is unclear how to deal with diffusion models which are unconditioned.

Acknowledgements

The authors would like to thank David McAllester for feedbacks on an early pitch of the work, Shashank Srivastava and Madhur Tulsiani for discussing the factor on synthetic experiments. We would like to thank friends at TRI and 3DL lab at UChicago for advice on the manuscript. This work was supported in part by the TRI University 2.0 program, and by the AFOSR MADlab Center of Excellence.

Made with ❤ by Yours Truly. Layout inspired by distill.